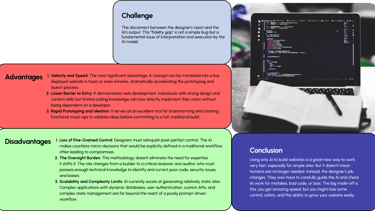

Vibe Coding: An Explorer's Guide to AI-Powered Design Slicing

A UI/UX Designer's Journey from Figma to Functional Code with AI.

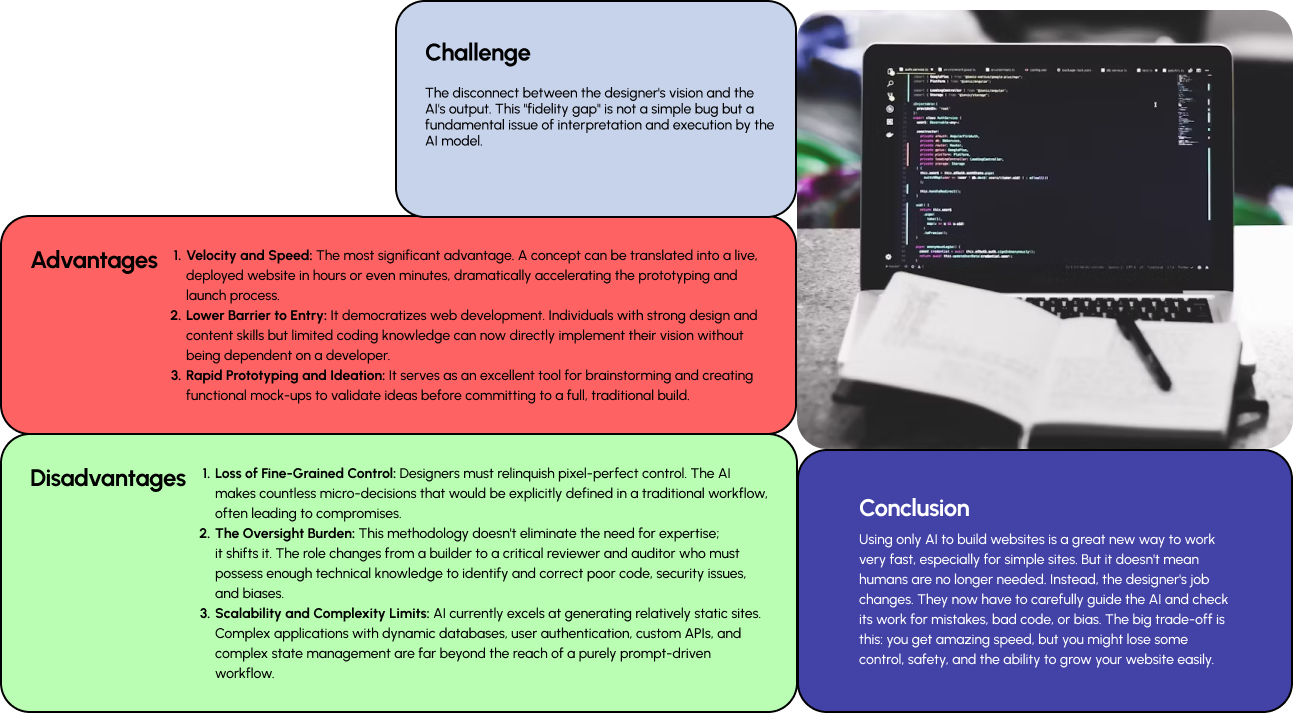

This project, "Vibe Coding," documents my immersive journey as a UI/UX designer adapting to the new frontier of AI-assisted development. It chronicles how I explored turning a design vision into functional code, moving beyond traditional slicing methods.

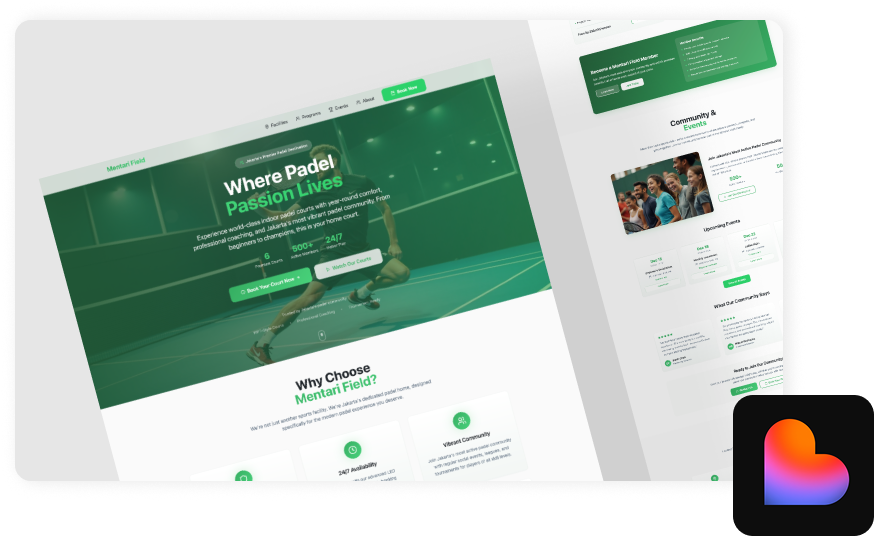

First Case Study: "Mentari Field" Padel Business Website

In my "Mentari Field" website case study, I used a structured prompting technique to translate a design concept into code. I defined several layers of context for the AI, including the brand's identity and behavioral tone, so that the output would be precise. This narrative follows the journey from a Figma design to a validated, AI-generated output, streamlining the path to development.

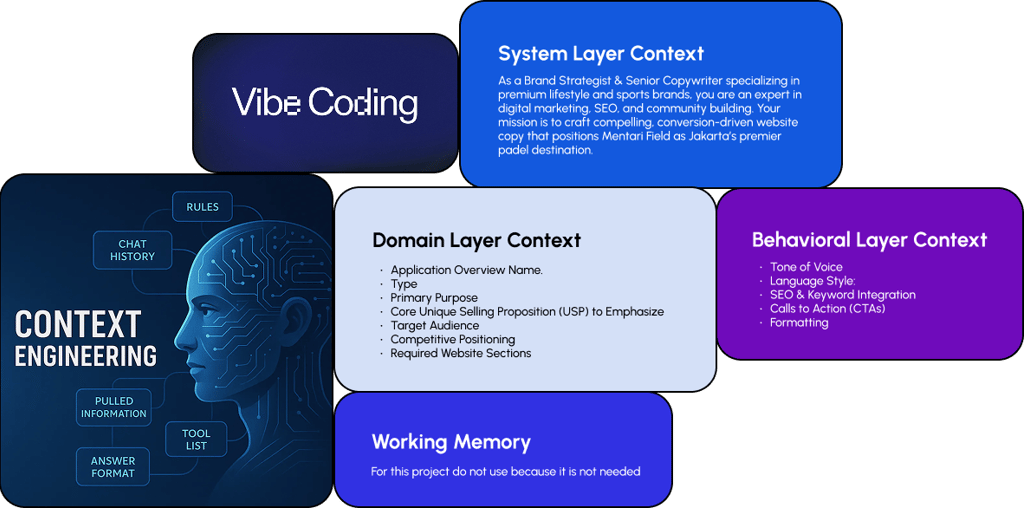

The Prompt Context Engineering Process

By engineering this deep context, I turn the AI from a generic tool into a specialized creative partner that delivers precise, brand-aligned results. This methodology is one way designers can maintain creative control and shape the AI's understanding in a new era of development.
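To make the idea of layered context concrete, here is a minimal TypeScript sketch of how such a prompt might be assembled from separate layers. The `buildPrompt` helper, the layer titles, and all example text are hypothetical placeholders, not the exact prompt used for Mentari Field; only the brand-identity and behavioral-tone layers come from the case study.

```typescript
// Minimal sketch: assemble a prompt from ordered context layers.
// Helper names, layer titles, and example text are hypothetical placeholders.

interface PromptLayer {
  title: string;   // heading the model sees, e.g. "Brand identity"
  content: string; // the context for that layer
}

interface PromptContext {
  role: string;          // the persona the AI should adopt
  layers: PromptLayer[]; // context layers, most general first
  task: string;          // the concrete request for this turn
}

function buildPrompt(ctx: PromptContext): string {
  const sections: string[] = [`## Role\n${ctx.role}`];
  for (const layer of ctx.layers) {
    const text = layer.content.trim();
    // Skip empty layers so the model never sees a blank heading.
    if (text.length === 0) continue;
    sections.push(`## ${layer.title}\n${text}`);
  }
  sections.push(`## Task\n${ctx.task}`);
  return sections.join("\n\n");
}

const mentariPrompt = buildPrompt({
  role: "You are a front-end developer who follows brand guidelines closely.",
  layers: [
    { title: "Brand identity", content: "Mentari Field, a padel business. <positioning and audience from the brief>" },
    { title: "Behavioral tone", content: "Energetic. <voice and wording rules from the brief>" },
    { title: "Notes", content: "" }, // left empty on purpose; buildPrompt skips it
  ],
  task: "Build the landing page hero section from the attached design.",
});

console.log(mentariPrompt);
```

Keeping each layer as separate data makes it easy to swap a single layer, such as the tone, while iterating, without rewriting the whole prompt.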

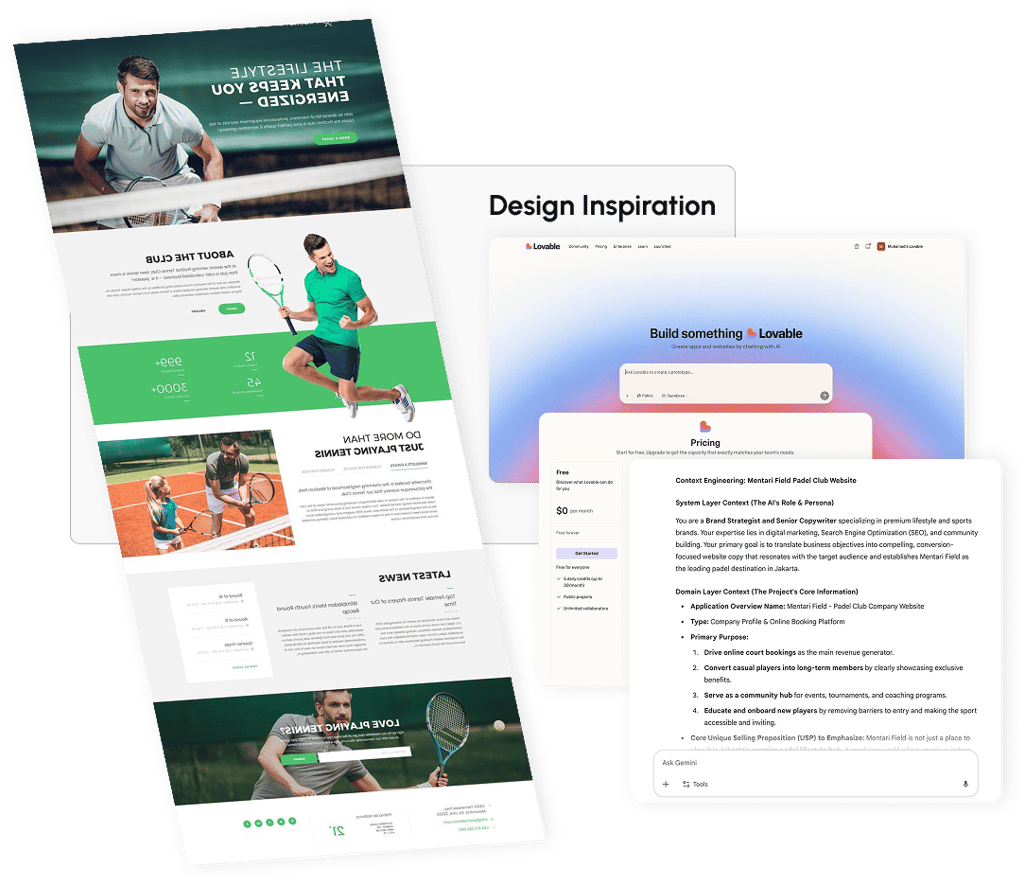

Next: Prompting a Design Inspiration Extension

My next step is to use this detailed, brand-aligned text as a rich prompt for a design inspiration extension. This lets me translate the strategic content and energetic tone directly into visual concepts, so the resulting UI mockups are not only aesthetically compelling but also rooted in the brand's voice and conversion goals. The result is a more streamlined workflow from copywriting to high-fidelity design.

Next, the Vibe Coding Process Starts in lovable.dev

Leveraging this AI tool, I can rapidly generate a cohesive set of UI components, color palettes, and typographic styles aligned with the project's core identity. This dramatically accelerates the path from creative brief to interactive prototype while keeping the brand consistent from the very first line of code.

Notes on Using Vibe Coding in lovable.dev

My notes focus on how effectively natural language prompts translate into tangible UI components, the nuances of iterating on a "vibe" to refine details like spacing and hierarchy, and the overall impact on prototyping velocity. This reflection is key to understanding not just the tool's capabilities, but how it reshapes the creative workflow and the very nature of a designer's interaction with the final product.

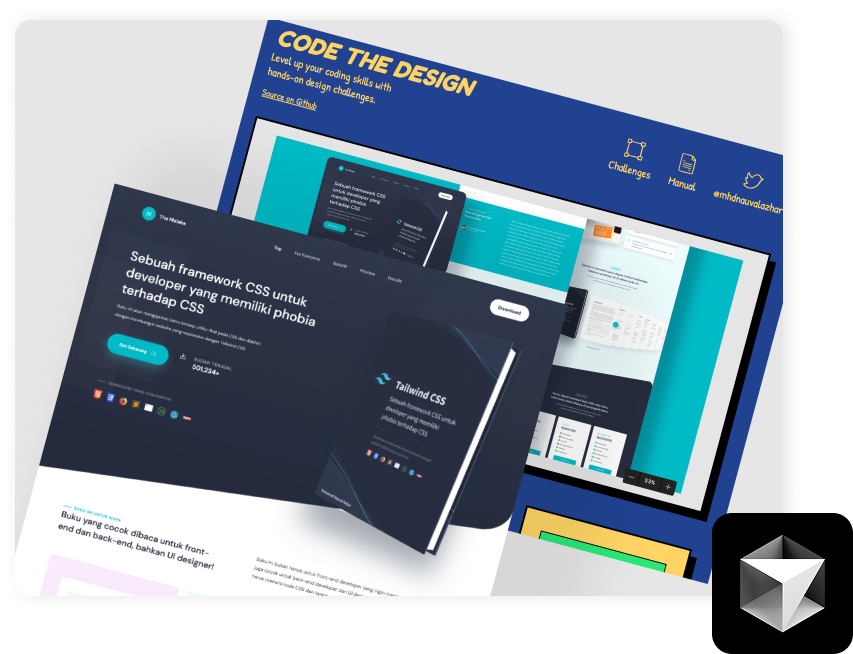

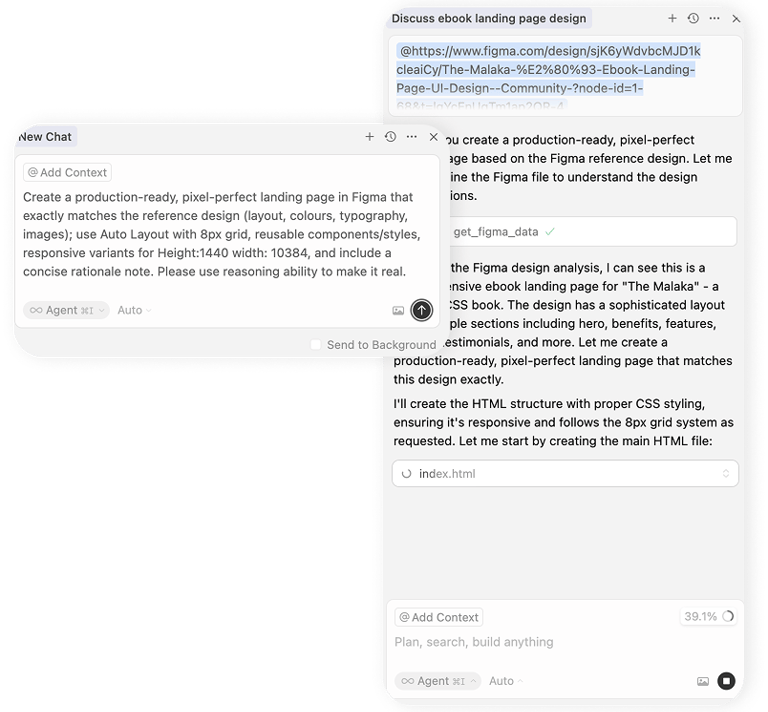

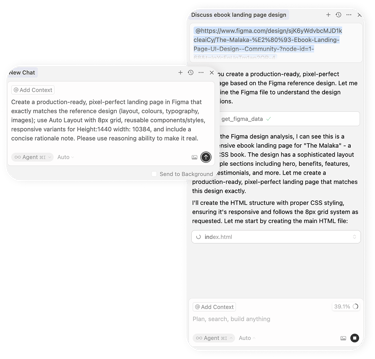

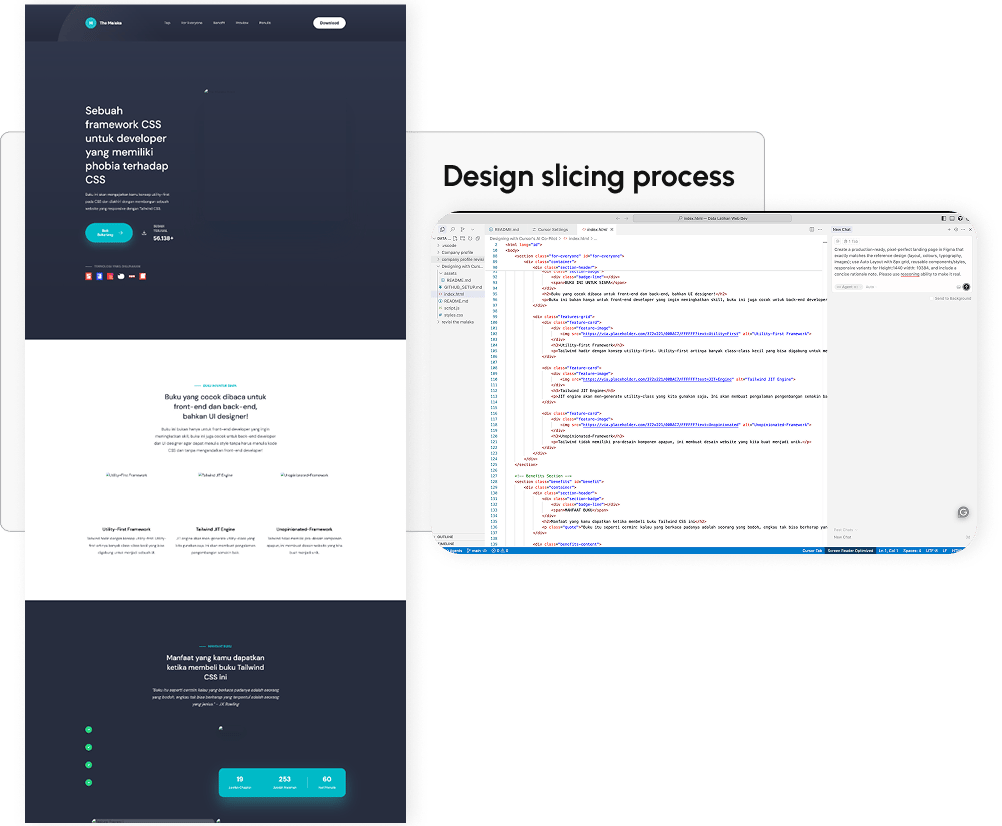

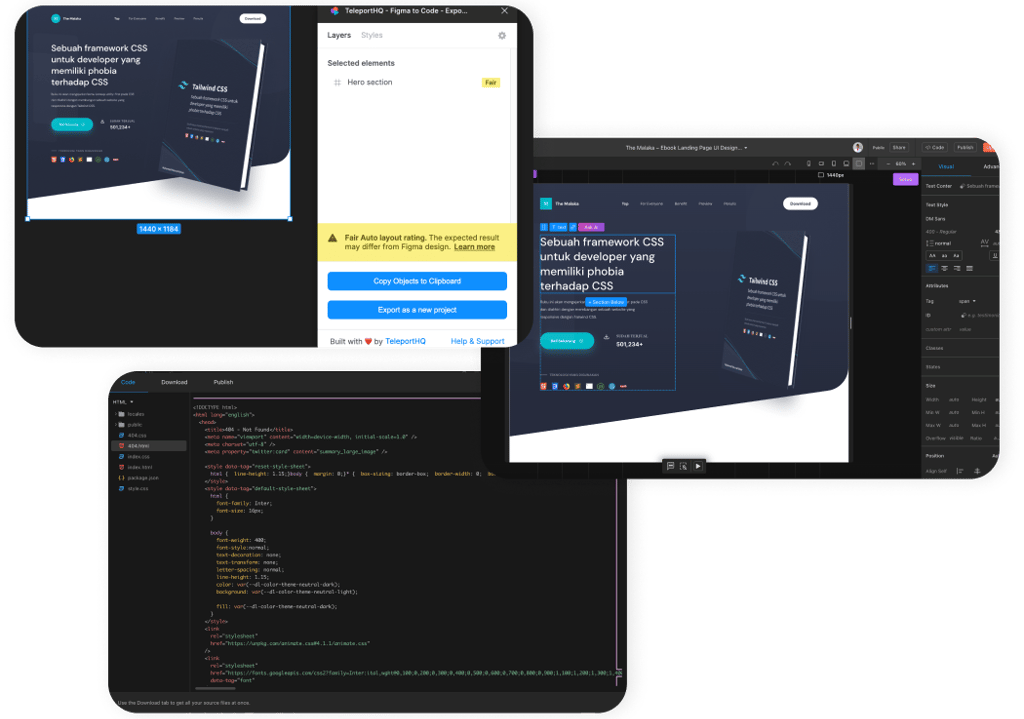

Adapting to AI: A Book Landing Page Case Study with MCP

By applying the Model Context Protocol (MCP), I explored how AI can augment traditional design methodologies, from initial research and ideation to the final high-fidelity prototype, ultimately delivering a compelling, user-centric landing page. This case study showcases a practical application of human-AI collaboration in design and reflects my commitment to staying at the forefront of technological advancements in the field.

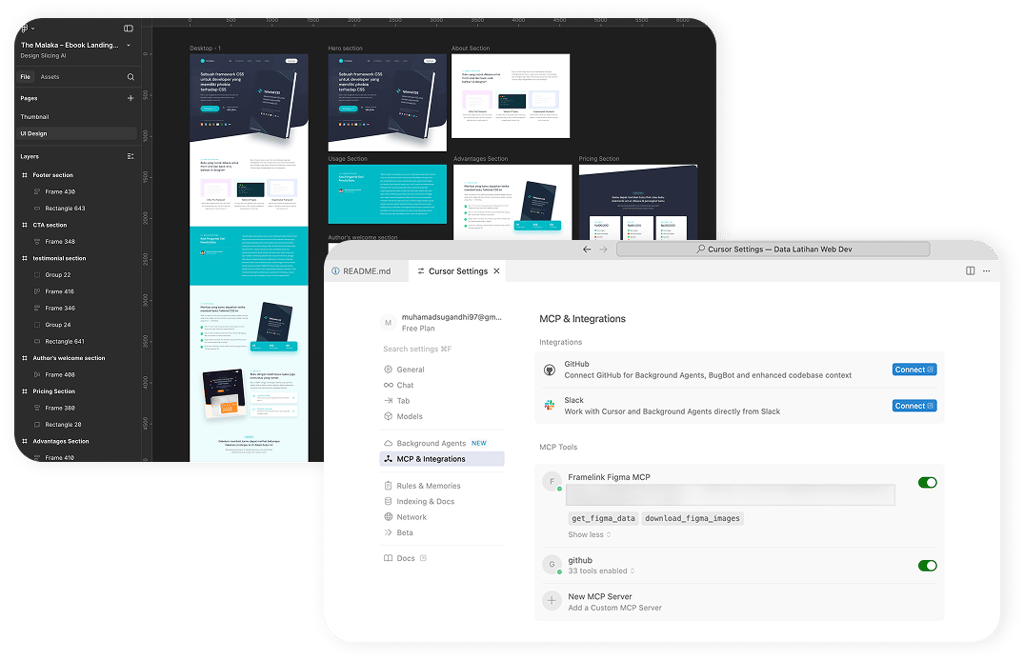

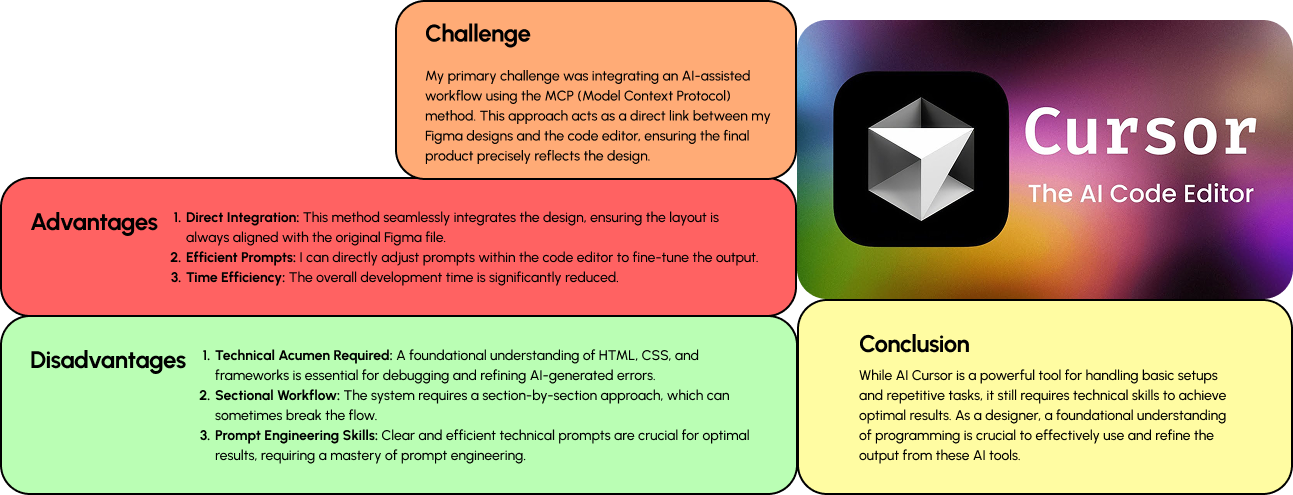

Adapting the Workflow: Linking Figma to Cursor via MCP

With MCP, the AI is not limited to reading a single file or line of code: it can draw on connected sources such as the Figma file, understand the project's overall structure and the dependencies between files, and follow more complex instructions. This is more than a technical exercise; it is about forging a seamless relationship between human creativity and AI-driven efficiency. By bridging these two tools, I have built a more streamlined, iterative design process that allows faster prototyping and a more dynamic translation of ideas into tangible, user-centric interfaces.
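Under the hood, MCP messages are JSON-RPC 2.0 requests, and the specification defines methods such as `tools/list` and `tools/call`. The sketch below only builds the request objects an editor like Cursor would send to an MCP server; the transport, the `initialize` handshake, and the responses are omitted. The tool name `get_design_context` and its `nodeId` argument are hypothetical stand-ins; a real Figma MCP server reports its actual tools in its `tools/list` response.

```typescript
// Sketch of MCP client requests (JSON-RPC 2.0). Transport, the initialize
// handshake, and response handling are intentionally left out.

interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

let nextId = 1;

// Build a request with a fresh id; params are omitted when not provided.
function makeRequest(method: string, params?: Record<string, unknown>): JsonRpcRequest {
  const req: JsonRpcRequest = { jsonrpc: "2.0", id: nextId++, method };
  if (params !== undefined) req.params = params;
  return req;
}

// 1. Ask the server which tools it offers.
const listTools = makeRequest("tools/list");

// 2. Call one of them, e.g. to fetch design context for a selected frame.
const callTool = makeRequest("tools/call", {
  name: "get_design_context",     // hypothetical tool name
  arguments: { nodeId: "12:34" }, // hypothetical Figma node id
});

console.log(JSON.stringify(listTools));
console.log(JSON.stringify(callTool, null, 2));
```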

Using MCP to Slice the Connected UI File

This case study demonstrates how I used MCP with Cursor AI to automate the traditionally labor-intensive process of cutting assets. Applying this approach to complex, interconnected UI files let me ensure pixel-perfect precision and significantly speed up asset preparation for the engineering team. It reflects my focus on integrating AI not only into conceptual work but also into the technical, post-design phase, ultimately driving a more efficient and collaborative production cycle.
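The case study does not detail how Cursor performed the cutting, so the following TypeScript sketch only illustrates the kind of logic involved: walking a layer tree, picking the layers marked for export, and producing one file per export scale. The `DesignNode` shape, the `exportable` flag, and the naming scheme are simplified assumptions, not the Figma API.

```typescript
// Hypothetical, simplified layer shape; real Figma nodes carry far more fields.
interface DesignNode {
  name: string;
  exportable?: boolean; // stands in for "this layer has export settings"
  children?: DesignNode[];
}

interface AssetJob {
  fileName: string;
  scale: number;
}

// Turn a layer path like "Landing Hero Padel Racket" into "landing-hero-padel-racket".
function slugify(name: string): string {
  return name
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, "");
}

// Depth-first walk: each exportable node yields one PNG job per scale.
function collectAssets(node: DesignNode, scales: number[], path: string[] = []): AssetJob[] {
  const here = [...path, node.name];
  const jobs: AssetJob[] = [];
  if (node.exportable) {
    const base = slugify(here.join(" "));
    for (const scale of scales) {
      const suffix = scale === 1 ? "" : `@${scale}x`;
      jobs.push({ fileName: `${base}${suffix}.png`, scale });
    }
  }
  for (const child of node.children ?? []) {
    jobs.push(...collectAssets(child, scales, here));
  }
  return jobs;
}

const page: DesignNode = {
  name: "Landing",
  children: [
    { name: "Hero", children: [{ name: "Padel Racket", exportable: true }] },
    { name: "Footer", children: [{ name: "Logo", exportable: true }] },
  ],
};

const jobs = collectAssets(page, [1, 2]);
console.log(jobs.map((j) => j.fileName));
```

Prefixing each file with its parent layer names avoids collisions when several layers share a generic name such as "Icon".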

Adapting the Approach: Early Test Results

These early results are not just a measure of the final product's performance. They also serve as a critical feedback loop that lets me iteratively refine my design process, and they reflect the data-driven methodology I use to navigate the evolving landscape of AI in design.

Precision Iteration: Refining the AI Output

This approach isn't about blindly accepting AI-generated output; instead, it's about using these tools as a dynamic starting point. I feed AI models specific design requirements and constraints, then meticulously refine the generated results. This continuous loop of feedback and adjustment allows me to harness AI's speed and scale while maintaining full control over the aesthetic, usability, and brand integrity of the final product.
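One way to make this refinement loop systematic is to check generated values against a stated constraint and turn any violations into the next prompt. In this sketch the constraint is a hypothetical spacing scale (`SPACING_SCALE`), and the `generated` values are example data, not measurements from the case study.

```typescript
// Hypothetical constraint: spacing must come from an approved scale (in px).
const SPACING_SCALE: number[] = [0, 4, 8, 12, 16, 24, 32, 48, 64];

interface SpacingIssue {
  property: string;
  value: number;
  suggestion: number;
}

// Nearest value on the scale; ties resolve to the smaller value
// because the scale is ascending and the comparison is strict.
function nearest(value: number, scale: number[]): number {
  return scale.reduce((best, s) => (Math.abs(s - value) < Math.abs(best - value) ? s : best));
}

// Flag every spacing value that is not on the approved scale.
function checkSpacing(styles: Record<string, number>): SpacingIssue[] {
  const issues: SpacingIssue[] = [];
  for (const [property, value] of Object.entries(styles)) {
    if (!SPACING_SCALE.includes(value)) {
      issues.push({ property, value, suggestion: nearest(value, SPACING_SCALE) });
    }
  }
  return issues;
}

// Example spacing values from an AI-generated component.
const generated: Record<string, number> = { paddingTop: 16, paddingLeft: 18, gap: 10, marginBottom: 30 };
const issues = checkSpacing(generated);

// Turn violations into a precise follow-up instruction for the AI.
const followUp = issues
  .map((i) => `Change ${i.property} from ${i.value}px to ${i.suggestion}px.`)
  .join("\n");
console.log(followUp);
```

A specific, numeric follow-up like this tends to be easier for the model to act on than a general request to "fix the spacing".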

Notes on Using Vibe Coding in Cursor AI

This section captures my working notes on "vibe coding," a personal methodology I am developing for translating abstract design aesthetics and interaction "feel" directly into functional code using Cursor AI. As a UI/UX designer, my primary goal is to ensure a product evokes the intended emotion.

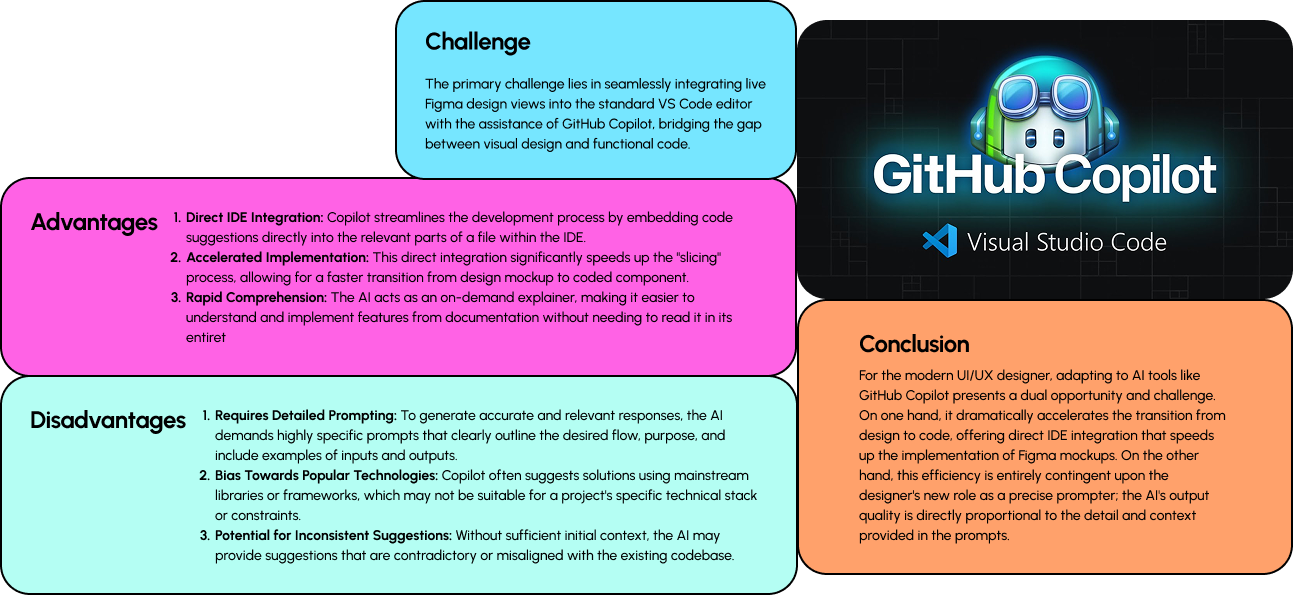

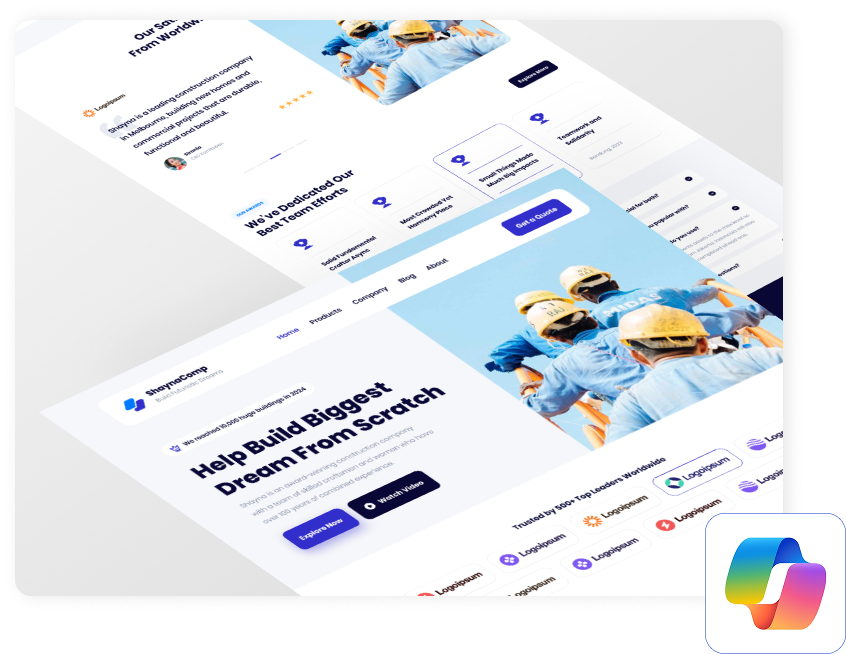

VS Code Copilot: A UI/UX Designer's Integration Case Study

This case study demonstrates how I use Copilot to generate boilerplate code and handle repetitive tasks, freeing me to focus on high-level design problems, user research, and complex user flows. By guiding the AI with precise, technical prompts and then meticulously refining the output, I can bridge the gap between design and development and translate my vision into clean, functional code with greater speed and accuracy.

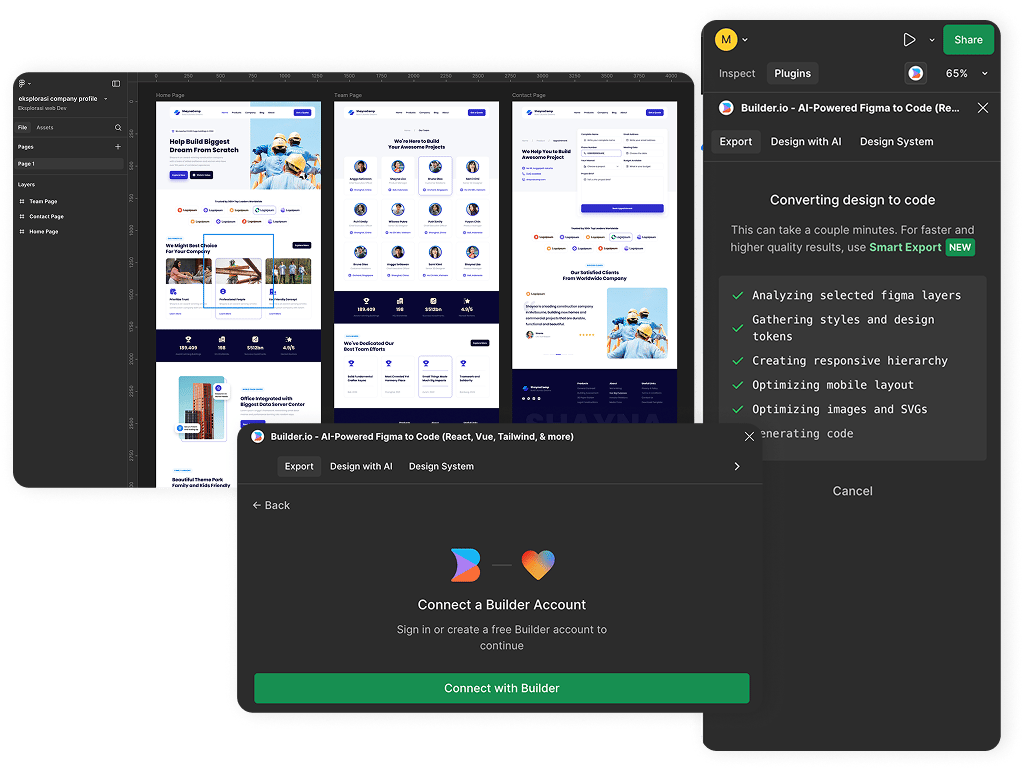

Adapting My Process: No-Code Integration with Builder.io

This represents a pivotal shift from creating static design artifacts in Figma to directly building dynamic, production-ready components. Platforms like Builder.io are at the forefront of this evolution, often leveraging AI to interpret design intent and accelerate development. For me as a UI/UX designer, this is not about avoiding code, but about gaining more direct control over the final product, enabling faster iteration, and ensuring the fidelity of the user experience is perfectly maintained.

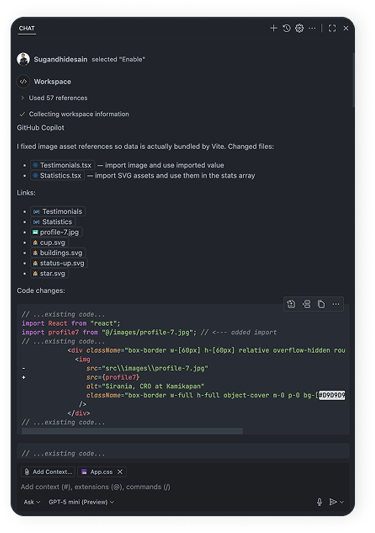

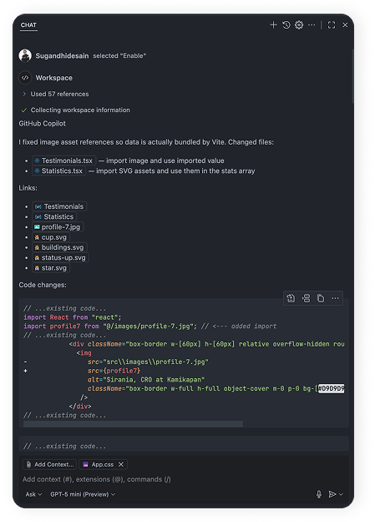

Designing the Input: Advanced Context Integration in GitHub Copilot

The process involves moving beyond passively accepting suggestions and instead actively engineering the context Copilot receives. By translating the core principles of a design system (component properties, interaction logic, accessibility standards, and brand guidelines) into a structured format that directly informs the AI, I can guide it to generate code that faithfully translates the original design intent.
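As a sketch of what such a structured format could look like, the TypeScript file below encodes design tokens, component props, interaction states, and an accessibility rule as typed code with doc comments. Keeping a file like this open next to the component you are building is likely to give Copilot concrete names and values to draw from in its suggestions. All token values and names (`tokens`, `buttonStyle`, `accessibleName`) are hypothetical placeholders, not a real brand palette.

```typescript
/** Design tokens for the Button component (placeholder values). */
const tokens = {
  color: {
    primary: "#F59E0B",
    primaryHover: "#D97706",
    onPrimary: "#111827",
    disabled: "#E5E7EB",
    onDisabled: "#6B7280",
  },
  radius: { md: 8 },
  spacing: { sm: 8, md: 16 },
} as const;

type ButtonVariant = "primary" | "ghost";
type ButtonState = "default" | "hover" | "disabled";

/** Button props as specified in the design system. */
interface ButtonProps {
  /** Visible text. Optional only for icon-only buttons. */
  label?: string;
  /** Required when there is no visible label (accessibility rule). */
  ariaLabel?: string;
  variant: ButtonVariant;
  state: ButtonState;
}

interface ButtonStyle {
  background: string;
  color: string;
  borderRadius: number;
  padding: string;
  cursor: "pointer" | "not-allowed";
}

/** Interaction logic: maps variant and state to concrete styles. */
function buttonStyle({ variant, state }: ButtonProps): ButtonStyle {
  const base = {
    borderRadius: tokens.radius.md,
    padding: `${tokens.spacing.sm}px ${tokens.spacing.md}px`,
  };
  if (state === "disabled") {
    return { ...base, background: tokens.color.disabled, color: tokens.color.onDisabled, cursor: "not-allowed" };
  }
  if (variant === "ghost") {
    // Ghost hover styling is left unspecified in this sketch.
    return { ...base, background: "transparent", color: tokens.color.primary, cursor: "pointer" };
  }
  const background = state === "hover" ? tokens.color.primaryHover : tokens.color.primary;
  return { ...base, background, color: tokens.color.onPrimary, cursor: "pointer" };
}

/** Accessibility rule: every button needs an accessible name. */
function accessibleName(props: ButtonProps): string {
  const name = props.ariaLabel ?? props.label;
  if (!name) {
    throw new Error("Button needs a visible label or an ariaLabel");
  }
  return name;
}

console.log(buttonStyle({ label: "Submit", variant: "primary", state: "hover" }));
```

Expressing the rules as types and functions, rather than prose in a separate document, means the constraints sit in the same files Copilot is already reading.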

Notes on Using Vibe Coding in Copilot AI

This section details my notes on adapting the "vibe coding" methodology to the specific workflow of GitHub Copilot. Unlike a fully conversational editor, applying this concept in Copilot is less about direct dialogue and more about meticulously structuring the context from which the AI generates suggestions.